Last year, Apple launched Core ML, which opened up the world of machine learning to Apple developers. Before that, we were already using machine learning through technologies like AutoCorrect, Siri, and the predictive keyboard, but Core ML put a new level of power and flexibility into the hands of developers. At WWDC 18, Apple announced Core ML 2, and in this article you'll learn what's new.

At a Glance

Before we dive into the changes that Core ML 2 brings, let's briefly discuss what Core ML really is and learn a little about the nuances of machine learning. Core ML is a framework that lets iOS and macOS developers easily and efficiently integrate machine learning into their apps.

What Is Machine Learning?

Machine learning is the use of statistical analysis to help computers make decisions and predictions based on patterns found in data. In other words, it's the act of having a computer form an abstract understanding of an existing data set (called a "model") and then using that model to analyze new data.
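To make "build a model from existing data, then use it on new data" concrete, here is a minimal Swift sketch that has nothing to do with Core ML itself. The "model" is just a straight line fitted to a few (x, y) points with ordinary least squares, and "analyzing new data" means plugging a new x into that line. The names LinearModel and fitLinearModel(to:) are hypothetical, chosen for illustration only; real Core ML models are far more complex, but the train-then-predict shape is the same.

```swift
/// A tiny "model": the straight line y = slope * x + intercept.
struct LinearModel {
    let slope: Double
    let intercept: Double

    /// Uses the learned line to make a prediction for new, unseen input.
    func predict(_ x: Double) -> Double {
        slope * x + intercept
    }
}

/// "Training": fits a line to existing (x, y) data using ordinary least squares.
func fitLinearModel(to data: [(x: Double, y: Double)]) -> LinearModel {
    precondition(data.count >= 2, "Need at least two data points")
    let n = Double(data.count)
    let meanX = data.reduce(0.0) { $0 + $1.x } / n
    let meanY = data.reduce(0.0) { $0 + $1.y } / n

    // Sum how x and y vary together, and how much x varies on its own.
    var sumXY = 0.0
    var sumXX = 0.0
    for point in data {
        sumXY += (point.x - meanX) * (point.y - meanY)
        sumXX += (point.x - meanX) * (point.x - meanX)
    }
    precondition(sumXX > 0, "x values must not all be identical")

    let slope = sumXY / sumXX
    return LinearModel(slope: slope, intercept: meanY - slope * meanX)
}

// Learn from existing data, then analyze a new data point.
let model = fitLinearModel(to: [(x: 1, y: 2), (x: 2, y: 4), (x: 3, y: 6)])
print(model.predict(4))  // 8.0
```

The model "understands" the data only as a slope and an intercept, which is exactly the kind of abstraction the paragraph above describes.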

How Does It Work?

Core ML is built on low-level technologies in Apple's platforms, which lets it offer fast, efficient machine learning tools for your apps. It uses Metal and Accelerate to take full advantage of the device's GPU and CPU, so predictions run quickly. It also lets machine learning run on the device itself, rather than requiring an internet connection for each request.

1. Batch Prediction

Predicts output feature values from the given a batch of input feature values.—Apple Documentation

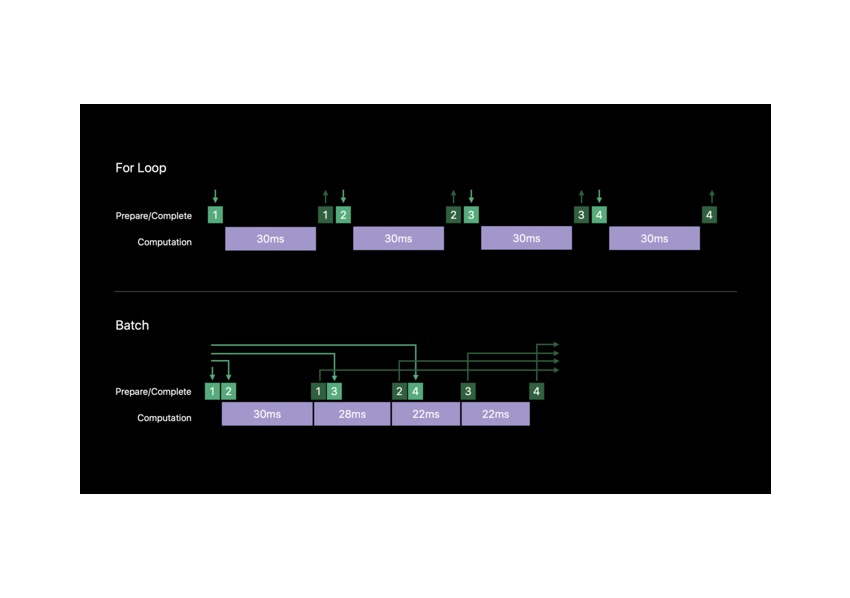

Batch prediction is worth noting because it wasn't available in the first version of Core ML. In short, batch prediction lets you run your model on a set of data and get a set of outputs back.

Suppose you had a Core ML model that classifies images as containing flowers or trees, and you wanted to classify many images, let's say 300. You would need to write a for-loop that iterates through the images and classifies each one individually with your model.

In Core ML 2, however, we get what Apple calls the Batch Predict API. This allows us to make multiple predictions on a set of data without having to use for-loops. If you wanted to use it, you’d just call:

let modelOutputs = try model.predictions(from: modelInputs, options: options)

In this example, modelInputs is the batch of inputs you'd like to run your model on (an object conforming to MLBatchProvider, such as an MLArrayBatchProvider), and options is an MLPredictionOptions instance; we won't cover the available options in this article. To learn more about the Batch Predict API, refer to Apple's documentation. While this may not seem like a big deal at first, Apple says it makes predictions up to a whopping 30% faster!

2. Training Models

Use Create ML with familiar tools like Swift and macOS playgrounds to create and train custom machine learning models on your Mac. You can train models to perform tasks like recognizing images, extracting meaning from text, or finding relationships between numerical values.—Apple Documentation

While Core ML has always been a powerful platform, it wasn't always easy to create your own models. In the past, you practically needed to know Python to create even the most basic models. Alongside Core ML 2, Apple also introduced Create ML, an easy way to create your own Core ML models.

Create ML isn't limited to image-based models, though. Without even creating a full Xcode project, you can train different types of models in a playground, then test them and export them for use in any application.

Image Classifier

Building on a convolutional neural network, Create ML can help you create a custom image classifier that identifies certain characteristics of a given image. For example, you could train it to distinguish between a tree and a flower.

Or you could use it for more complex tasks, such as identifying the type of a plant or a specific breed of dog. As with most machine learning models, accuracy generally improves as you train on more images.

Check out my post here on Envato Tuts+ to learn how to create an image classifier in Create ML.

Machine Learning: Training an Image Classification Model With Create ML

Text Classifier (NLP)

In addition to being a tool for image classification, Create ML can also help you create text-based machine learning models. For example, you could create a model which tells you the sentiment in a particular sentence. Or you could make a spam filter which uses characteristics of the text (i.e. the words used) to check whether a string is “spam” or “not spam”.
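When you train a text classifier, Create ML handles turning the text into features for you. Still, it can help to see what "characteristics of the text (the words used)" might look like. The sketch below builds a simple bag-of-words representation, counting how often each lowercased word appears. It only illustrates the idea; the function name wordCounts(in:) is hypothetical and is not how Create ML works internally.

```swift
/// Turns a piece of text into a simple bag-of-words representation:
/// how many times each lowercased word appears.
func wordCounts(in text: String) -> [String: Int] {
    var counts: [String: Int] = [:]
    // Treat anything that isn't a letter or digit (spaces, punctuation) as a separator.
    let words = text.lowercased().split(whereSeparator: { !$0.isLetter && !$0.isNumber })
    for word in words {
        counts[String(word), default: 0] += 1
    }
    return counts
}

let features = wordCounts(in: "WIN a free prize! Click now to win.")
// features["win"] == 2, features["free"] == 1, features["click"] == 1, ...
```

A spam filter could learn that messages with high counts for words like "win" or "free" tend to be labeled "spam". Real text classifiers typically use richer features, but this is the basic intuition.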

Tabular Data Classifier

Sometimes several data points, or features, are helpful when trying to classify data. Spreadsheets are a good example of this, with each column holding a feature, and Create ML can create Core ML models from CSV files as well.

This means your Excel spreadsheets, exported as CSV, can be used to make a model that predicts stock market movements based on buying and selling patterns, or one that predicts a book's genre based on the author's name, the title, and the number of pages.

3. Model Size Reductions

Bundling your machine learning model in your app is the easiest way to get started with Core ML. As models get more advanced, they can become large and take up significant storage space. For a neural-network based model, consider reducing its footprint by using a lower precision representation for its weight parameters.—Apple Documentation

With the introduction of Core ML 2 and iOS 12, developers can now reduce the size of their already-trained models by over 70%. Model size can be a real issue: you may have noticed that some of your apps get larger and larger with each update!

That's no surprise: developers are constantly improving their machine learning models, and, as the developer documentation notes, more advanced models take up more storage space, which makes the app itself bigger. If an app gets too big, some users may stop downloading its updates or stop using it altogether.

Fortunately, you can now quantize a model, that is, store its weights using fewer bits. This decreases the model's size significantly, depending on how much quality you're willing to give up. Quantization isn't the only option, though; there are other ways, too!
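Here is a minimal Swift sketch of linear quantization, the simplest version of the idea: spread a fixed number of evenly spaced levels between the smallest and largest weight, then round every weight to its nearest level. With 8 bits, each weight takes 1 byte instead of the 4 bytes of a 32-bit float, which is where a reduction of around 75% comes from. This is not Core ML Tools' implementation, which works on real model files and offers more sophisticated options; the type and function names here are hypothetical.

```swift
/// Weights stored as small integer "levels" plus what's needed to map them back to floats.
struct QuantizedWeights {
    let levels: [UInt8]  // one level per weight: 1 byte each instead of 4 for Float32
    let minimum: Float   // value represented by level 0
    let step: Float      // distance between neighboring levels

    /// Approximate reconstruction of the original weights.
    func dequantized() -> [Float] {
        levels.map { minimum + Float($0) * step }
    }
}

/// Linear quantization: spread 2^bits evenly spaced levels between the smallest
/// and largest weight, then round every weight to its nearest level.
func linearlyQuantize(_ weights: [Float], bits: Int) -> QuantizedWeights {
    precondition((1...8).contains(bits), "This sketch stores levels in a UInt8")
    let minimum = weights.min() ?? 0
    let maximum = weights.max() ?? 0
    let highestLevel = Float((1 << bits) - 1)  // e.g. 255 for 8 bits
    // Avoid a zero step when every weight is identical.
    let step = maximum > minimum ? (maximum - minimum) / highestLevel : 1
    let levels = weights.map { weight -> UInt8 in
        let level = ((weight - minimum) / step).rounded()
        return UInt8(min(max(level, 0), highestLevel))  // clamp against rounding error
    }
    return QuantizedWeights(levels: levels, minimum: minimum, step: step)
}
```

Fewer bits means fewer levels and larger rounding errors (at most half a step per weight), which is the size-versus-quality trade-off described above.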

Convert to Half-Precision

Core ML Tools gives developers a way to convert a model's weights to half precision. If you don't know what weights are yet, that's okay; all you need to know is that they're the learned numbers that make up the model, and the number of bits used to store each one determines how precisely it's represented. As you may have guessed, half precision simply means storing each weight in half as many bits.

Models before Core ML 2 only had the option to store their weights as 32-bit numbers, which is great for precision but not ideal for storage size. Half precision reduces each weight to just 16 bits, which roughly halves the space the weights take up. If you want to do this to your models, see the Core ML Tools documentation for a comprehensive guide.
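A quick back-of-the-envelope calculation shows why the number of bits per weight matters so much. The Swift sketch below assumes a hypothetical network with 25 million weights and ignores file-format overhead, so real model files will be somewhat larger; the point is only how storage scales with bits per weight.

```swift
/// Approximate storage taken by a model's weights, ignoring file-format overhead.
func weightStorageMegabytes(weightCount: Int, bitsPerWeight: Int) -> Double {
    Double(weightCount * bitsPerWeight) / 8 / 1_000_000
}

// A hypothetical network with 25 million weights.
let weightCount = 25_000_000
for bits in [32, 16, 8] {
    print("\(bits)-bit weights: \(weightStorageMegabytes(weightCount: weightCount, bitsPerWeight: bits)) MB")
}
// 32-bit weights: 100.0 MB
// 16-bit weights: 50.0 MB
// 8-bit weights: 25.0 MB
```

Going from 32 to 16 bits halves the weights; quantizing further to 8 bits cuts them to a quarter of the original size.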

Download and Compile

Keeping your models on the device is great because it results in greater security and performance, and it doesn't depend on a solid internet connection. However, if your app uses multiple models to create a seamless experience for the user, not all of them may be needed at once.

Instead of bundling every model with your app, which increases the amount of space your app takes up on your users' devices, you can download models on an as-needed basis and compile them on the spot. You can even keep the downloaded models on the user's device so that you don't have to download the same model several times.
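The caching part of that workflow can be sketched in a few lines of Swift. Core ML's MLModel.compileModel(at:) compiles a downloaded .mlmodel file and returns the URL of the compiled model in a temporary location, so an app that wants to reuse it should move it somewhere permanent. In the sketch below, the function cachedCompiledModel(named:in:fetchAndCompile:) is hypothetical, and the download-and-compile step is an injected closure (an assumption) so that the caching logic can be shown on its own.

```swift
import Foundation

/// Returns the location of a compiled model in a local cache, calling
/// `fetchAndCompile` only when no cached copy exists yet.
///
/// Assumption: in an app, `fetchAndCompile` would download the .mlmodel file and
/// pass it to Core ML's `MLModel.compileModel(at:)`, which returns a compiled
/// .mlmodelc directory in a temporary location.
func cachedCompiledModel(named name: String,
                         in cacheDirectory: URL,
                         fetchAndCompile: () throws -> URL) throws -> URL {
    let fileManager = FileManager.default
    let destination = cacheDirectory.appendingPathComponent("\(name).mlmodelc", isDirectory: true)

    // Cache hit: reuse the compiled model, with no download and no compile.
    if fileManager.fileExists(atPath: destination.path) {
        return destination
    }

    // Cache miss: fetch and compile once, then move the result out of its
    // temporary location so it survives for later launches.
    try fileManager.createDirectory(at: cacheDirectory, withIntermediateDirectories: true)
    let temporaryURL = try fetchAndCompile()
    try fileManager.moveItem(at: temporaryURL, to: destination)
    return destination
}
```

In an iOS app, the cache directory might live inside Application Support, and the returned URL could then be passed to MLModel(contentsOf:) to load the model.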

Conclusion

In this article, you learned about the latest and greatest technologies in Core ML and how it stacks up against the previous year’s version of the API. While you’re here on Envato Tuts+, check out some of our other great machine learning content!

Machine Learning: Training an Image Classification Model With Create ML

Swift: Get Started With Natural Language Processing in iOS 11

Machine Learning: Get Started With Image Recognition in Core ML

iOS SDK: Conversation Design User Experiences for SiriKit and iOS