Wouldn’t it be great if an Android app could see and understand its surroundings? Can you imagine how much better its user interface could be if it could look at its users and instantly know their ages, genders, and emotions? Well, such an app might seem futuristic, but it’s totally doable today.

With the IBM Watson Visual Recognition service, creating mobile apps that can accurately detect and analyze objects in images is easier than ever. In this tutorial, I’ll show you how to use it to create a smart Android app that can guess a person’s age and gender and identify prominent objects in a photograph.

Prerequisites

To be able to follow this tutorial, you must have:

- an IBM Bluemix account

- Android Studio 3.0 Canary 8 or higher

- and a device or emulator running Android 4.4 or higher

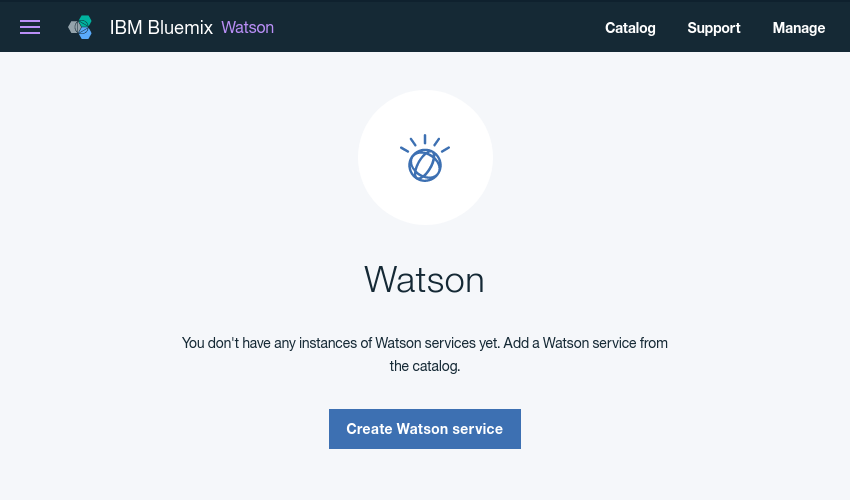

1. Activating the Visual Recognition Service

Like all Watson services, the Visual Recognition service must be manually activated before it can be used in an app. Log in to the IBM Bluemix console and navigate to Services > Watson. On the page that opens, press the Create Watson service button.

From the list of available services shown next, choose Visual Recognition.

You can now give a meaningful name to the service and press the Create button.

Once the service is ready, an API key will be generated for it. You can view it by opening the Service credentials tab and pressing the View Credentials button.

2. Project Setup

In this tutorial, we’ll be using the Watson Java and Android SDKs to interact with the Visual Recognition service. We’ll also be using the Picasso library to fetch and display images from the Internet. Therefore, add the following implementation dependencies to your app module’s build.gradle file:

    implementation 'com.ibm.watson.developer_cloud:visual-recognition:3.9.1'
    implementation 'com.ibm.watson.developer_cloud:android-sdk:0.4.2'
    implementation 'com.squareup.picasso:picasso:2.5.2'

To be able to interact with Watson’s servers, your app will need the INTERNET permission, so request it in your project’s AndroidManifest.xml file.

Additionally, the app we’ll be creating today will need access to the device’s camera and external storage media, so you must also request the CAMERA and WRITE_EXTERNAL_STORAGE permissions.

Lastly, add your Visual Recognition service’s API key to the strings.xml file.

    <string name="api_key">a1234567890bcdefe</string>

3. Initializing a Visual Recognition Client

The Watson Java SDK exposes all the features the Visual Recognition service offers through the VisualRecognition class. Therefore, you must now initialize an instance of it using its constructor, which expects both a version date and the API key as its arguments.

While using the Visual Recognition service, you’ll usually want to take pictures with the device’s camera. The Watson Android SDK has a CameraHelper class to help you do so. Although you don’t have to, I suggest you also initialize an instance of it inside your activity’s onCreate() method.

    private VisualRecognition vrClient;
    private CameraHelper helper;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        // Initialize Visual Recognition client
        vrClient = new VisualRecognition(
                VisualRecognition.VERSION_DATE_2016_05_20,
                getString(R.string.api_key)
        );

        // Initialize camera helper
        helper = new CameraHelper(this);
    }

At this point, you have everything you need to start analyzing images with the service.

4. Detecting Objects

The Visual Recognition service can detect a large variety of physical objects. As input, it expects a reasonably well-lit picture whose resolution is at least 224 x 224 pixels. For now, let’s use the device camera to take such a picture.
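As a rough illustration of the resolution requirement, a check like the one below could be applied to a picture's dimensions before sending it to the service. This is only a sketch: the ImageSizeCheck class and its isLargeEnough() method are hypothetical names and are not part of the Watson SDKs. Only the 224 x 224 minimum comes from the text above.

```java
/** Hypothetical helper; not part of the Watson Java or Android SDKs. */
final class ImageSizeCheck {
    // Minimum side length, in pixels, that the tutorial states for input pictures.
    static final int MIN_SIDE = 224;

    /** Returns true when both dimensions meet the stated minimum. */
    static boolean isLargeEnough(int width, int height) {
        return width >= MIN_SIDE && height >= MIN_SIDE;
    }

    public static void main(String[] args) {
        System.out.println(isLargeEnough(640, 480)); // prints true
        System.out.println(isLargeEnough(200, 300)); // prints false
    }
}
```

On a device, the width and height would typically come from the Bitmap object's getWidth() and getHeight() methods.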

Step 1: Define a Layout

The user must be able to press a button to take the picture, so your activity’s layout XML file must have a Button widget. It must also have a TextView widget to list the objects detected.

Optionally, you can throw in an ImageView widget to display the picture.

The Button widget in the layout should name an on-click event handler, here called takePicture(), in its onClick attribute. You can generate a stub for the handler in your activity by clicking on the light bulb shown beside it.

    public void takePicture(View view) {
        // More code here
    }

Step 2: Take a Picture

You can take a picture by simply calling the CameraHelper object’s dispatchTakePictureIntent() method, so add the following code inside the event handler:

helper.dispatchTakePictureIntent();

The above method uses the device’s default camera app to take the picture. That means to gain access to the picture taken, you must override your activity’s onActivityResult() method and look for results whose request code is REQUEST_IMAGE_CAPTURE. Here’s how you can do that:

    @Override
    protected void onActivityResult(int requestCode,
                                    int resultCode,
                                    Intent data) {
        super.onActivityResult(requestCode, resultCode, data);

        if(requestCode == CameraHelper.REQUEST_IMAGE_CAPTURE) {
            // More code here
        }
    }

Once you find the right result, you can extract the picture from it in the form of a Bitmap object using the getBitmap() method of the CameraHelper class. You can also get the absolute path of the picture using the getFile() method. We’ll need both the bitmap and the absolute path, so add the following code next:

    final Bitmap photo = helper.getBitmap(resultCode);
    final File photoFile = helper.getFile(resultCode);

If you chose to add the ImageView widget to your layout, you can display the picture now by directly passing the bitmap to its setImageBitmap() method.

    ImageView preview = findViewById(R.id.preview);
    preview.setImageBitmap(photo);

Step 3: Classify the Picture

To detect items in the picture, you must pass the image as an input to the classify() method of the VisualRecognition object. Before you do so, however, you must wrap it in a ClassifyImagesOptions object, which can be created using the ClassifyImagesOptions.Builder class.

The return value of the classify() method is a ServiceCall object, which supports both synchronous and asynchronous network requests. For now, let’s call its execute() method to make a synchronous request. Of course, because network operations are not allowed on the UI thread, you must remember to do so from a new thread.

    AsyncTask.execute(new Runnable() {
        @Override
        public void run() {
            VisualClassification response =
                    vrClient.classify(
                            new ClassifyImagesOptions.Builder()
                                    .images(photoFile)
                                    .build()
                    ).execute();

            // More code here
        }
    });
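The idea of keeping a blocking call off the main thread can also be sketched in plain Java with an ExecutorService, which may be worth considering on newer projects because AsyncTask was deprecated in Android API level 30. In this sketch, blockingClassify() is a hypothetical stand-in for a synchronous call such as execute(); it does not contact Watson, and the class name is made up for illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class BackgroundCallSketch {
    /** Hypothetical stand-in for a blocking network call; it does no real I/O. */
    static String blockingClassify(String imagePath) {
        return "classified:" + imagePath;
    }

    /** Submits the blocking call to a worker thread and returns a handle to its result. */
    static Future<String> classifyInBackground(ExecutorService executor, String imagePath) {
        return executor.submit(() -> blockingClassify(imagePath));
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<String> result = classifyInBackground(executor, "photo.jpg");
            // get() waits for the worker thread; an app would avoid calling it on the UI thread.
            System.out.println(result.get()); // prints classified:photo.jpg
        } finally {
            executor.shutdown();
        }
    }
}
```

In an Android activity, the result would usually be handed back to the UI thread, for example with runOnUiThread(), rather than waited on with get().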

The classify() method is built to handle multiple pictures at once. Consequently, its response is a list of classification details. Because we are currently working with a single picture, we just need the first item of the list. Here’s how you can get it:

    ImageClassification classification =
            response.getImages().get(0);

    VisualClassifier classifier =
            classification.getClassifiers().get(0);

The Visual Recognition service treats each item it has detected as a separate class of type VisualClassifier.VisualClass. By calling the getClasses() method, you can get a list of all the classes.

Each class has, among other details, a name and a confidence score associated with it. The following code shows you how to loop through the list of classes and display the names of only those whose scores are greater than 70% in the TextView widget.

    final StringBuffer output = new StringBuffer();
    for(VisualClassifier.VisualClass object: classifier.getClasses()) {
        if(object.getScore() > 0.7f)
            output.append("<")
                  .append(object.getName())
                  .append("> ");
    }

    runOnUiThread(new Runnable() {
        @Override
        public void run() {
            TextView detectedObjects =
                    findViewById(R.id.detected_objects);
            detectedObjects.setText(output);
        }
    });

Note that the above code uses the runOnUiThread() method because the contents of the TextView widget can be updated only from the UI thread.
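The thresholding step can be tried out off-device as well. The following is a minimal sketch in plain Java, assuming a hypothetical Label class that stands in for a detected class with a name and a score between 0 and 1. It is not the SDK's VisualClass type, and the sample names and scores are invented for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ScoreFilterSketch {
    /** Hypothetical stand-in for a detected class: a name plus a confidence score. */
    static final class Label {
        final String name;
        final float score;

        Label(String name, float score) {
            this.name = name;
            this.score = score;
        }
    }

    /** Keeps only the names whose score is strictly greater than the threshold. */
    static List<String> namesAbove(List<Label> labels, float threshold) {
        List<String> kept = new ArrayList<>();
        for (Label label : labels) {
            if (label.score > threshold) {
                kept.add(label.name);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Invented sample data, not real output from the service.
        List<Label> labels = Arrays.asList(
                new Label("dog", 0.93f),
                new Label("animal", 0.71f),
                new Label("sofa", 0.42f));
        System.out.println(namesAbove(labels, 0.7f)); // prints [dog, animal]
    }
}
```

Raising or lowering the threshold trades fewer false positives against fewer reported objects, so 0.7 is a starting point rather than a fixed rule.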

If you run the app now and take a picture, you will be able to see Watson’s image classification working.

5. Analyzing Faces

The Visual Recognition service has a dedicated method to process human faces. It can determine the age and gender of a person in any photograph. If the person’s famous, it can also name him or her.

Step 1: Define a Layout

Analyzing faces with the Visual Recognition service is not too different from classifying objects, so you are free to reuse the layout you created earlier. However, to introduce you to a few more features the service offers, I’m going to create a new layout with slightly different functionality.

This time, instead of taking pictures using the camera and passing them to the service, let’s directly pass an image URL to it. To allow the user to type in a URL and start the analysis, our layout will need an EditText widget and a Button widget. It will also need a TextView widget to display the results of the analysis.

I suggest you also add an ImageView widget to the layout so that the user can see the image the URL points to.

Step 2: Display the Image

Inside the on-click handler of the Button widget, you can call the getText() method of the EditText widget to determine the image URL the user typed in. Once you know the URL, you can simply pass it to Picasso’s load() and into() methods to download and display the image in the ImageView widget.

    EditText imageURLInput = findViewById(R.id.image_url);
    final String url = imageURLInput.getText().toString();

    ImageView preview = findViewById(R.id.preview);
    Picasso.with(this).load(url).into(preview);
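Because the URL is typed in by the user, it may help to check that it at least looks like a web address before handing it to Picasso or the service. The sketch below is one possible approach using only java.net.URI. The ImageUrlCheck class and its looksLikeHttpUrl() method are hypothetical names, and the check does not confirm that the URL actually points to an image.

```java
import java.net.URI;
import java.net.URISyntaxException;

final class ImageUrlCheck {
    /** Returns true when the text parses as an absolute http or https URL with a host. */
    static boolean looksLikeHttpUrl(String text) {
        try {
            URI uri = new URI(text.trim());
            String scheme = uri.getScheme();
            boolean isWebScheme =
                    "http".equalsIgnoreCase(scheme) || "https".equalsIgnoreCase(scheme);
            return isWebScheme && uri.getHost() != null;
        } catch (URISyntaxException e) {
            // Text such as "not a url" contains characters that are illegal in a URI.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(looksLikeHttpUrl("https://example.com/face.jpg")); // prints true
        System.out.println(looksLikeHttpUrl("not a url"));                    // prints false
    }
}
```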

Step 3: Run Face Analysis

To run face analysis on the URL, you must use the detectFaces() method of the VisualRecognition client. Just like the classify() method, this method also needs an options object as its input, in this case a VisualRecognitionOptions object.

Because you already know how to use the execute() method to make synchronous requests, let’s now call the enqueue() method instead, which runs asynchronously and needs a callback. The following code shows you how:

    vrClient.detectFaces(new VisualRecognitionOptions.Builder()
            .url(url)
            .build()
    ).enqueue(new ServiceCallback<DetectedFaces>() {
        @Override
        public void onResponse(DetectedFaces response) {
            // More code here
        }

        @Override
        public void onFailure(Exception e) {

        }
    });

As you can see in the above code, inside the onResponse() method of the callback object, you have access to a DetectedFaces object, which contains a list of face analysis results. Because we used a single image as our input, we’ll be needing only the first item of the list. By calling its getFaces() method, you get a list of all the Face objects detected.

    List<Face> faces = response.getImages().get(0).getFaces();

Each Face object has a gender and age range associated with it, which can be accessed by calling the getGender() and getAge() methods.

The getGender() method actually returns a Gender object. You must call its own getGender() method to get the gender as a string, which will be either “MALE” or “FEMALE”. Similarly, the getAge() method returns an Age object. By calling its getMin() and getMax() methods, you can determine the approximate age range of the face in years.
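To make the shape of that data concrete, here is a small plain-Java sketch that formats one face's details into a readable string. The FaceInfo class is a hypothetical stand-in that flattens the gender string and the age bounds into simple fields. It is not the SDK's Face class, and the sample values are invented.

```java
final class FaceSummarySketch {
    /** Hypothetical stand-in for one analyzed face; not the SDK's Face class. */
    static final class FaceInfo {
        final String gender; // "MALE" or "FEMALE", as described in the text
        final int minAge;    // lower bound of the estimated age range, in years
        final int maxAge;    // upper bound of the estimated age range, in years

        FaceInfo(String gender, int minAge, int maxAge) {
            this.gender = gender;
            this.minAge = minAge;
            this.maxAge = maxAge;
        }
    }

    /** Formats one face as "<GENDER, min - max years old>". */
    static String describe(FaceInfo face) {
        return "<" + face.gender + ", " + face.minAge + " - " + face.maxAge + " years old>";
    }

    public static void main(String[] args) {
        // Invented sample values, not real output from the service.
        System.out.println(describe(new FaceInfo("FEMALE", 25, 34)));
        // prints <FEMALE, 25 - 34 years old>
    }
}
```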

The following code shows you how to loop through the list of Face objects, generate a string containing the genders and ages of all the faces, and display it in the TextView widget:

    String output = "";
    for(Face face:faces) {
        output += "<" + face.getGender().getGender() + ", " +
                face.getAge().getMin() + " - " +
                face.getAge().getMax() + " years old>\n";
    }

    TextView personDetails = findViewById(R.id.person_details);
    personDetails.setText(output);

Here’s a sample face analysis result:

Conclusion

The Watson Visual Recognition service makes it extremely easy for you to create apps that are smart and aware of their surroundings. In this tutorial, you learned how to use it with the Watson Java and Android SDKs to detect and analyze generic objects and faces.

To learn more about the service, you can refer to the official documentation.
