Overview

HTML is almost intuitive. CSS is a great advancement that cleanly separates the structure of a page from its look and feel. JavaScript adds some pizazz. That’s the theory. The real world is a little different.

In this tutorial, you’ll learn how the content you see in the browser actually gets rendered and how to go about scraping it when necessary. In particular, you’ll learn how to count Disqus comments. Our tools will be Python and awesome packages like requests, BeautifulSoup, and Selenium.

When Should You Use Web Scraping?

Web scraping is the practice of automatically fetching the content of web pages designed for interaction with human users, parsing them, and extracting some information (possibly navigating links to other pages). It is sometimes necessary when there is no other way to get the information you need. Ideally, though, the application provides a dedicated API for accessing its data programmatically. There are several reasons web scraping should be your last resort:

- It is fragile (the web pages you’re scraping might change frequently).

- It might be forbidden (some web apps have policies against scraping).

- It might be slow and expensive (if you need to fetch and wade through a lot of noise).
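One machine-readable hint about a site's scraping policy is its robots.txt file. The following is a minimal sketch of checking it with the standard library's `urllib.robotparser`. The robots.txt content, the `my-scraper` user agent, and the example.com URLs are all made up for illustration, and a robots.txt check does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a real site you would download it,
# for example with parser.set_url(".../robots.txt") followed by parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that is already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# "my-scraper" is an assumed user-agent name for this sketch.
article_allowed = parser.can_fetch("my-scraper", "https://example.com/articles/intro")
private_allowed = parser.can_fetch("my-scraper", "https://example.com/private/data")
delay_seconds = parser.crawl_delay("my-scraper")

print(article_allowed, private_allowed, delay_seconds)
```

Honoring the crawl delay between requests also helps with the "slow and expensive" concern, since it keeps your scraper from hammering the server.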

Understanding Real-World Web Pages

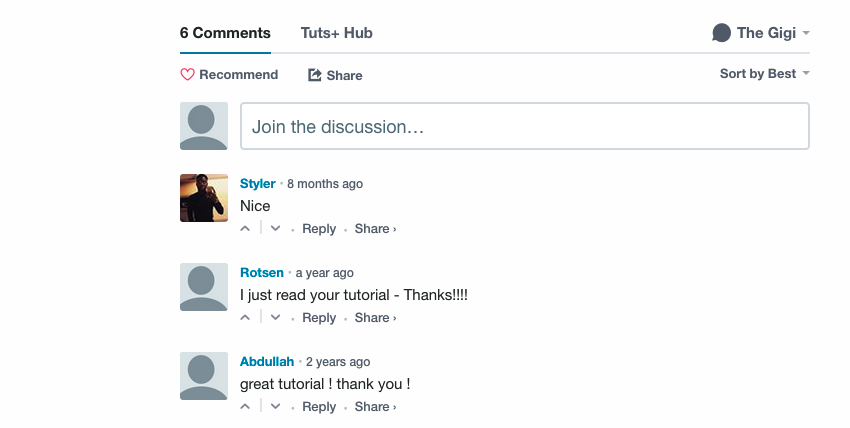

Let’s understand what we are up against, by looking at the output of some common web application code. In the article Introduction to Vagrant, there are some Disqus comments at the bottom of the page:

In order to scrape these comments, we need to find them on the page first.

View Page Source

Every browser since the dawn of time (the 1990s) has supported viewing the HTML of the current page. The source of Introduction to Vagrant starts with a huge chunk of minified and uglified JavaScript unrelated to the article itself. Here is a small portion of it:

Here is some actual HTML from the page:

This looks pretty messy, but what is surprising is that you will not find the Disqus comments in the source of the page.
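You can make the same observation in code. The sketch below inspects a static page source with the standard library's `html.parser` and shows that an empty placeholder element is present while the comment text is not. `SAMPLE_SOURCE` is a hypothetical, heavily simplified stand-in for the real page, and the comment text "Great tutorial" is invented for the check.

```python
from html.parser import HTMLParser

# Hypothetical stand-in for the static source of the article page.
SAMPLE_SOURCE = """\
<html>
  <head><script>var a=function(b){return b};</script></head>
  <body>
    <article><h1>Introduction to Vagrant</h1><p>Article text.</p></article>
    <div id="disqus_thread"></div>
    <script src="https://example.disqus.com/embed.js" async></script>
  </body>
</html>
"""


class SourceInspector(HTMLParser):
    """Collects element ids and visible text, skipping script/style bodies."""

    def __init__(self):
        super().__init__()
        self.ids = []
        self.text = []
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        element_id = dict(attrs).get("id")
        if element_id:
            self.ids.append(element_id)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text.append(data.strip())


inspector = SourceInspector()
inspector.feed(SAMPLE_SOURCE)
visible_text = " ".join(inspector.text)

has_placeholder = "disqus_thread" in inspector.ids
has_comment_text = "Great tutorial" in visible_text  # invented comment text
print(has_placeholder, has_comment_text)
```

Against a live page you would feed the parser the text of the HTTP response (for example, `requests.get(url).text`) instead of the sample string.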

The Mighty Inline Frame

It turns out that the page is a mashup, and the Disqus comments are embedded as an iframe (inline frame) element. You can verify this by right-clicking on the comments area, where the context menu shows frame information and frame source options:
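The same frame information can be pulled out programmatically once you have the rendered DOM (for instance, from Selenium's `driver.page_source`). The sketch below lists the iframe elements in an HTML string using the standard library. `SAMPLE_RENDERED_DOM`, including the iframe's id and src, is a hypothetical example rather than the actual markup of the page.

```python
from html.parser import HTMLParser

# Hypothetical rendered DOM fragment, after scripts have injected the iframe.
SAMPLE_RENDERED_DOM = """\
<div id="disqus_thread">
  <iframe id="comments-frame" title="Disqus"
          src="https://disqus.example/embed/comments/"></iframe>
</div>
"""


class IframeFinder(HTMLParser):
    """Records the attributes of every <iframe> start tag it encounters."""

    def __init__(self):
        super().__init__()
        self.frames = []

    def handle_starttag(self, tag, attrs):
        if tag == "iframe":
            self.frames.append(dict(attrs))


finder = IframeFinder()
finder.feed(SAMPLE_RENDERED_DOM)

for frame in finder.frames:
    print(frame.get("id"), frame.get("src"))
```

Note that this only works on the rendered DOM. The static page source typically does not contain the iframe, because it is added by JavaScript after the page loads.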

That makes sense. Embedding third-party content as an iframe is one of the primary reasons to use iframes. Let’s find the