And that’s a wrap! This year’s Google I/O has come to a close, and as usual there were lots of announcements and releases for developers to get excited about.

Let’s look at some of the biggest news from Google I/O 2018.

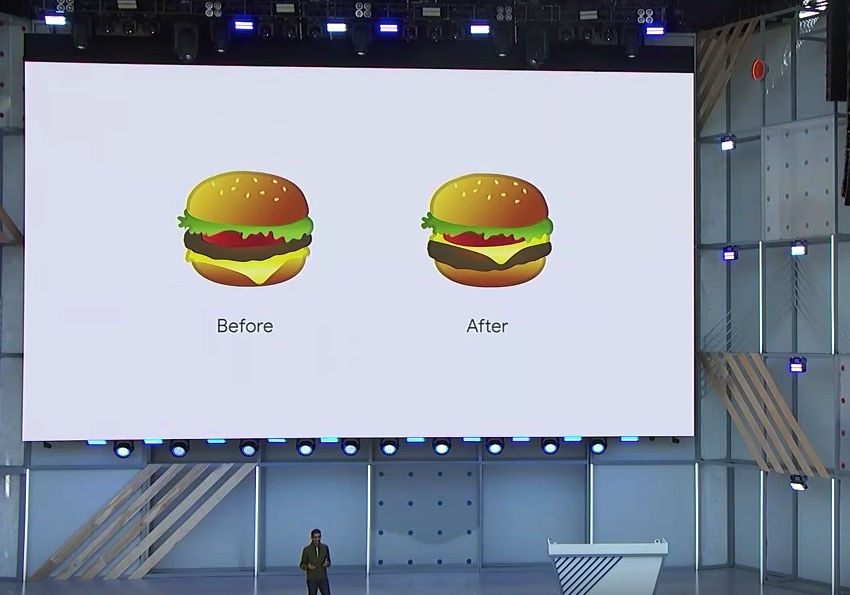

A Major Fix for One of Google’s Core Products

Google kicked things off with a huge announcement, within the first few seconds of their opening keynote. “It came to my attention that we had a major bug in one of our core products,” said Google CEO Sundar Pichai. “We got the cheese wrong in our burger emoji.” Now fixed!

Android P Is Now in Beta (But Still Nameless)

While Android P’s name is still shrouded in mystery, Google I/O 2018 did bring us the first beta of Android P, plus a closer look at some of its key features:

- Adaptive battery. Battery life is a concern for all mobile users, so Android P is introducing a new feature that will optimize battery usage for the individual user. Based on a person’s habits, the adaptive battery will place running apps into groups ranging from “active” to “rare,” where each group has different restrictions. If your app is optimized for Doze, App Standby, and Background Limits, then Adaptive Battery should work out of the box.

- App Actions. This new feature uses machine learning to analyse the user’s context and recent actions, and then presents your app to the user at the moment when they need it the most. App Actions will make your app visible to users across multiple Google and Android surfaces, such as the Google Search app, the Play Store, Google Assistant, and the Launcher, plus a variety of Assistant-enabled devices, including speakers and smart displays. To take advantage of this feature, you’ll need to register your app to handle one or more common intents.

- Slices. These are customisable UI templates that will allow users to engage with your app outside of the full-screen experience, across Android and Google surfaces, such as the Google Assistant. You can create slices that include a range of dynamic content, including text, images, videos, live data, scrolling content, deep links, and even interactive controls such as toggles and sliders. Although slices are a new feature for Android P, they will eventually be available all the way back to Android KitKat, thanks to project Jetpack (which we’ll be looking at later in this article).
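Returning to the adaptive battery groups described earlier in this list: Android P calls them App Standby Buckets, and an app can ask which one it currently sits in. The sketch below is a plain-Kotlin illustration, not platform code. The four integer values are assumed to mirror the STANDBY_BUCKET_* constants on android.app.usage.UsageStatsManager in API level 28, and describeBucket is a hypothetical helper name invented for this example.

```kotlin
// Values assumed to mirror the STANDBY_BUCKET_* constants defined on
// android.app.usage.UsageStatsManager in API level 28.
const val BUCKET_ACTIVE = 10
const val BUCKET_WORKING_SET = 20
const val BUCKET_FREQUENT = 30
const val BUCKET_RARE = 40

// Hypothetical helper: translate a raw bucket value into a label for logging.
fun describeBucket(bucket: Int): String = when (bucket) {
    BUCKET_ACTIVE -> "active"
    BUCKET_WORKING_SET -> "working set"
    BUCKET_FREQUENT -> "frequent"
    BUCKET_RARE -> "rare"
    else -> "unknown ($bucket)"
}

fun main() {
    // On a device, the value would come from
    // UsageStatsManager.getAppStandbyBucket() rather than a literal.
    println(describeBucket(BUCKET_RARE)) // prints "rare"
}
```

Logging the bucket during testing is one way to check how restrictive the system is being with your app at any given moment.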

The first beta of Android P is now available for Sony Xperia XZ2, Xiaomi Mi Mix 2S, Nokia 7 Plus, Oppo R15 Pro, Vivo X21, OnePlus 6, Essential PH‑1, Pixel, and Pixel 2. To check whether your device is eligible for this beta, head over to the Android Beta Program website.

More Kotlin Extensions

One of the biggest moments at last year’s keynote came when Director of Product Management, Stephanie Cuthbertson, announced that Kotlin would become an officially supported language for Android development, so we were always going to see more Kotlin-related news at Google I/O 2018.

Android KTX is one interesting new Kotlin project that got some attention during this year’s I/O. It is a collection of modules consisting of extensions that make the Android platform APIs more concise and idiomatic to use from Kotlin. Using these extensions, you can make lots of minor improvements to your code. For example, if you wanted to edit SharedPreferences using vanilla Kotlin, then your code might look something like this:

    sharedPreferences.edit()
        .putBoolean("key", value)
        .apply()

With the help of Android KTX’s androidx.core:core-ktx module, you can now write code that looks more like this:

    sharedPreferences.edit {
        putBoolean("key", value)
    }
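To make the mechanics less magical, here is a minimal, self-contained sketch of the pattern behind that kind of extension: a function that takes a lambda with receiver, runs it against an editor, and then calls apply() for you. FakePreferences and FakeEditor are hypothetical stand-ins invented for this example so that it runs without the Android SDK. They are not the real SharedPreferences classes, and the actual core-ktx implementation may differ in its details.

```kotlin
// Hypothetical stand-ins for SharedPreferences and its Editor,
// so the sketch runs on a plain JVM without the Android SDK.
class FakeEditor(private val target: MutableMap<String, Any>) {
    private val pending = mutableMapOf<String, Any>()

    fun putBoolean(key: String, value: Boolean): FakeEditor {
        pending[key] = value
        return this
    }

    // Pending changes only become visible once apply() is called.
    fun apply() {
        target.putAll(pending)
    }
}

class FakePreferences {
    private val values = mutableMapOf<String, Any>()

    fun edit(): FakeEditor = FakeEditor(values)

    fun getBoolean(key: String, default: Boolean): Boolean =
        values[key] as? Boolean ?: default
}

// The KTX-style helper: the lambda runs with the editor as its receiver,
// and apply() is called once the lambda returns.
inline fun FakePreferences.edit(action: FakeEditor.() -> Unit) {
    val editor = edit()
    editor.action()
    editor.apply()
}

fun main() {
    val prefs = FakePreferences()
    prefs.edit {
        putBoolean("key", true)
    }
    println(prefs.getBoolean("key", false)) // prints "true"
}
```

The benefit is less about saving keystrokes and more about removing the chance of forgetting the final apply() call.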

Android KTX is currently in preview, so you should expect some breaking changes before it reaches its first stable release. However, if you want to experiment with this early version, then the following modules are available today:

- androidx.core:core-ktx

- androidx.fragment:fragment-ktx

- androidx.palette:palette-ktx

- androidx.sqlite:sqlite-ktx

- androidx.collection:collection-ktx

- androidx.lifecycle:lifecycle-viewmodel-ktx

- androidx.lifecycle:lifecycle-reactivestreams-ktx

- android.arch.navigation:navigation-common-ktx

- android.arch.navigation:navigation-fragment-ktx

- android.arch.navigation:navigation-runtime-ktx

- android.arch.navigation:navigation-testing-ktx

- android.arch.navigation:navigation-ui-ktx

- android.arch.work:work-runtime-ktx

To start working with Android KTX, you’ll need to add a dependency for each module that you want to use. For example:

    dependencies {
        implementation 'androidx.fragment:fragment-ktx:1.0.0-alpha1'
    }
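If your project uses Gradle’s Kotlin DSL (a build.gradle.kts file) rather than the Groovy syntax, the equivalent declaration would look roughly like the following. This is only a sketch: the version string simply reuses the alpha from the example above, so check for the latest release before copying it.

```kotlin
// build.gradle.kts (module level)
dependencies {
    // Same artifact as the Groovy example; coordinates are group:artifact:version.
    implementation("androidx.fragment:fragment-ktx:1.0.0-alpha1")
}
```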

Android Jetpack

Android Jetpack is a new set of libraries, tools and architectural guidance that aims to eliminate boilerplate code by automatically managing activities such as background tasks, navigation, and lifecycle management.

Jetpack is divided into four categories:

- Foundation. This includes components for core system capabilities, such as AppCompat and Android KTX.

- UI. This is the category for UI-focused components, such as Fragment and Layout, but also components that extend beyond smartphones, including Auto, TV, and Wear OS by Google.

- Architecture. This is where you’ll find modules to help you manage the UI component lifecycle and handle data persistence, including Data Binding, Lifecycles, LiveData, Room, and ViewModel.

- Behaviour. This category contains modules such as Permissions, Notifications, and the newly announced Slices.

The easiest way to get started with Jetpack is to download Android Studio 3.2 or higher and then create a project using the Activity & Fragment + ViewModel template, which is designed to help you incorporate Jetpack into your app.

A New Build of Android Studio 3.2 Canary

No Google I/O would be complete without some Android Studio news! This year, we got a new preview of Android Studio 3.2, which introduced the following features:

A New Navigation Editor

Your app’s navigation is crucial to delivering a good user experience. For the best results, you should carefully design your navigation so users can complete each task in as few screens as possible.

To help you create a navigational structure that feels intuitive and effortless, Jetpack includes a Navigation Architecture Component, and Android Studio 3.2 is supporting this component with a new graphical navigation editor.

The navigation editor lets you visualise and perfect your app’s navigational structure, although the downside is that you can’t just use it out of the box: you’ll need to set up the Navigation Architecture Component and create a Navigation graph XML resource file, before you can access this editor.

Goodbye Support Library, Hello AndroidX

Android’s Support Library is invaluable, but due to the way it’s evolved over the years, it isn’t exactly intuitive, especially for newcomers. For example, the Support Library includes many components and packages named v7, even though API 14 is the minimum that most of these libraries support.

To help clear up this confusion, Google is refactoring the Support Library into a new AndroidX library that will feature simplified package names and Maven groupIds and artifactIds that better reflect the library’s contents. For more information about the mappings between old and new classes, check out the AndroidX refactoring map.

Android Studio 3.2 supports this migration with a new refactoring feature, which you can access by Control-clicking your project and selecting Refactor > Refactor to AndroidX. This will update your code, resources, and Gradle configuration to reference the Maven artifacts and refactored classes.
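As a rough illustration of what the refactoring changes in your source files, here is how one commonly used class moves. MainActivity is a hypothetical class name, and the snippet assumes the androidx.appcompat:appcompat dependency is already on your classpath, so treat it as a sketch rather than a complete migration.

```kotlin
// Before the migration (Support Library):
// import android.support.v7.app.AppCompatActivity

// After the migration (AndroidX): the "v7" version segment is gone
// and the package name describes the library instead.
import androidx.appcompat.app.AppCompatActivity

// Hypothetical activity; the class name and its API stay the same,
// only the import changes.
class MainActivity : AppCompatActivity()
```

Because only package names change, most of the migration is mechanical, which is why it lends itself to an automated refactoring.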

According to the Google blog, they plan to continue updating the android.support-packaged libraries throughout the P Preview timeframe, to give the community plenty of time to migrate to AndroidX.

Reduce Your APK Size With Android App Bundle

Since Android Market was rebranded as Google Play in March 2012, average app size has quintupled, and there’s evidence to suggest that for every 6 MB increase in APK size, you can expect to see a 1% decrease in installation rates.

To help you get your APK size under control, Android Studio 3.2 introduces the concept of Android App Bundles. Under this new model, you build a single artifact that includes all the code, assets, and libraries that your app needs for every device, but the actual APK generation is performed by Google Play’s Dynamic Delivery service.

This new service generates APKs that are optimized for each specific device configuration, so the user gets a smaller download containing only the code and resources that are required by their particular device, and you don’t have to worry about building, signing, uploading and managing multiple APKs.

If you already organize your app’s code and resources according to best practices, then creating an App Bundle in Android Studio 3.2 is fairly straightforward:

- Choose Build > Build Bundle(s) / APK(s) from the Android Studio toolbar.

- Select Build Bundle(s).

This generates an app bundle and places it in your project-name/module-name/build/outputs/bundle/ directory.
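If you need to control which dimensions Google Play is allowed to split on, the Android Gradle plugin exposes a bundle block for this. The sketch below uses Gradle’s Kotlin DSL and assumes Android Gradle plugin 3.2 or higher. As far as we’re aware, splitting is enabled for all three dimensions by default, so the only line that changes behaviour here is the language one.

```kotlin
// build.gradle.kts (base module), assuming Android Gradle plugin 3.2+
android {
    bundle {
        language {
            // Keep every language in each download, for example
            // to support an in-app language picker.
            enableSplit = false
        }
        density {
            // Deliver only the screen-density resources a device needs.
            enableSplit = true
        }
        abi {
            // Deliver only the native libraries for the device's CPU architecture.
            enableSplit = true
        }
    }
}
```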

To generate a signed app bundle that you can upload to the Google Play console:

- Select Build > Generate Signed Bundle/APK from the Android Studio toolbar.

- Select Android App Bundle and then click Next.

- In the Module dropdown menu, select your app’s base module.

- Complete the rest of the signing dialogue, as normal, and Android Studio will generate your signed bundle.

When you upload your App Bundle, the Play Console automatically generates split APKs and multi-APKs for all the device configurations that your application supports. If you’re curious, then you can see exactly what artifacts it’s created, using the new App Bundle Explorer:

- Sign into the Google Play Console.

- Select your application.

- In the left-hand menu, select Release management > App releases > Manage.

- Select the bundle that you want to explore.

- Click Open in bundle explorer.

You can also add dynamic feature modules to your App Bundle, which contain features and assets that the user won’t require at install time but may need to download at a later date. Eventually, Google also plans to add instant-enable support to the App Bundle, which will allow users to launch your app’s module from a link, without installation, in a manner that sounds very reminiscent of Android Instant Apps.
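To give a feel for how an on-demand download might be requested from code, here is a minimal sketch using the Play Core library’s SplitInstallManager. It assumes the Play Core dependency is on your classpath and that your bundle contains a dynamic feature module. The module name "photo_filters", the function name requestFeature, and the log tag are all hypothetical, chosen only for this example.

```kotlin
import android.content.Context
import android.util.Log
import com.google.android.play.core.splitinstall.SplitInstallManagerFactory
import com.google.android.play.core.splitinstall.SplitInstallRequest

// Hypothetical helper: ask Google Play to download one dynamic feature module.
fun requestFeature(context: Context, moduleName: String = "photo_filters") {
    val manager = SplitInstallManagerFactory.create(context)

    // A single request can list several modules; this one asks for just one.
    val request = SplitInstallRequest.newBuilder()
        .addModule(moduleName)
        .build()

    manager.startInstall(request)
        .addOnSuccessListener { sessionId ->
            // The request was accepted; the download itself continues asynchronously.
            Log.d("DynamicFeature", "Install session started: $sessionId")
        }
        .addOnFailureListener { exception ->
            // The request could not be started, for example due to a network error.
            Log.w("DynamicFeature", "Install request failed", exception)
        }
}
```

In a real app you would typically also listen for progress updates, so that you can show the user a download indicator before opening the feature.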

Populate Your Layouts With Sample Data

When your layout includes lots of runtime data, it can be difficult to visualise how your app will eventually look. Now, whenever you add a View to your layout, you’ll have the option to populate it with a range of sample data.

To see this feature in action:

- Open Android Studio’s Design tab.

- Drag a RecyclerView into your app’s layout.

- Make sure your RecyclerView is selected.

- In the Attributes panel, find the RecyclerView / listitem section and give the corresponding More button a click (where the cursor is positioned in the following screenshot).

This launches a window where you can choose from a variety of sample data.

Android Profiler Gets an Energy Profiler

The Android Profiler has also been updated with lots of new features, most notably an Energy Profiler that displays a graph of your app’s estimated energy usage.

New Lint Checks for Java/Kotlin Interoperability

To make sure your Java code plays nicely with your Kotlin code, Android Studio 3.2 introduces new Lint checks that enforce the best practices described in the Kotlin Interop Guide.

To enable these checks:

- Choose Android Studio > Preferences from the Android Studio toolbar.

- Select Editor from the left-hand menu.

- Select Inspections.

- Expand the Kotlin section, followed by the Java interop issues section.

- Select the inspections that you want to enable.
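To give a sense of the kind of code these checks encourage, here is a small sketch of Kotlin written with Java callers in mind, using annotations that the Kotlin Interop Guide recommends. Every name in it (TextUtils, Greeter, shout, MAX_LENGTH) is hypothetical, and it shows only a handful of the guide’s recommendations.

```kotlin
// Give the generated class for this file's top-level functions a friendly
// name, so Java sees TextUtils.shout(...) rather than a name derived
// from the file name.
@file:JvmName("TextUtils")

// @JvmOverloads generates a Java overload for each default parameter.
class Greeter @JvmOverloads constructor(
    private val greeting: String = "Hello"
) {
    fun greet(name: String): String = "$greeting, $name!"

    companion object {
        // Without @JvmStatic, Java would have to call Greeter.Companion.create().
        @JvmStatic
        fun create(): Greeter = Greeter()

        // const val is exposed to Java as a static field: Greeter.MAX_LENGTH.
        const val MAX_LENGTH = 80
    }
}

// Top-level function, callable from Java as TextUtils.shout("hi").
fun shout(text: String): String = text.uppercase() + "!"

fun main() {
    println(Greeter.create().greet("I/O")) // prints "Hello, I/O!"
    println(shout("kotlin"))               // prints "KOTLIN!"
}
```

None of these annotations change how the code behaves when called from Kotlin. They only affect the shape of the API that Java callers see.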

Why Won’t Android Studio Detect My Device?

At some point, we’ve all experienced the pain of connecting our Android smartphone or tablet to our development machine, only for Android Studio to refuse to recognise its existence. Android Studio 3.2 introduces a Connection Assistant that can help you troubleshoot these frustrating connection issues.

To launch the Assistant, select Tools > Connection Assistant from the Android Studio toolbar, and then follow the onscreen instructions.

Actions for the Assistant

If you’ve built Actions for the Assistant, then Google I/O saw the launch of several new and expanded features that can help you get more out of your Actions.

Customise Actions With Your Own Branding

It’s now possible to create a custom theme for your Actions. For example, you could change an Action’s background image and typeface to complement your app’s branding.

To create themed Actions:

- Head over to the Actions console (which has also undergone a redesign).

- Open the project where you want to implement your custom theme.

- In the left-hand menu, select Theme customization.

This takes you to a screen where you can make the following customisations:

- Background colour. A colour that’s applied to the background of your Action cards. Wherever possible you should use a light colour, as this makes the card’s content easier to read.

- Primary colour. A colour that’s applied to header text, such as card titles, and UI components such as buttons. It’s recommended that you use a dark colour, as these provide the greatest contrast with the card’s background.

- Typography. A font family that’s applied to your card’s primary text, such as titles.

- Shape. Give your Action cards angled or curved corners.

- Background image. Upload an image to use as your Action card’s background. You’ll need to provide separate images for the device’s landscape and portrait modes.

Once you’re happy with your changes, click Save. You can then see your theme in action, by selecting Simulator from the left-hand menu.

Show the Assistant What Your Actions Can Do!

Google is in the process of mapping all the different ways that people can ask for things to a set of built-in intents. These intents are an easy way of letting the Assistant know that your Action can fulfil specific categories of user requests, such as getting a credit score or playing a game. In this way, you can quickly and easily expand the range of phrases that trigger your Actions, without going to the effort of defining those terms explicitly.

A developer preview of the first set of built-in intents is already available, with Google planning to roll out “hundreds of built-in intents in the coming months.”

You can integrate these built-in intents using the Dialogflow Console or the Actions SDK, depending on how you implemented your Actions.

Using Dialogflow

- Head over to the Dialogflow Console.

- Select your agent from the left-hand menu.

- Find Intents in the left-hand menu, and then select its accompanying + icon.

- Click to expand the Events section.

- Select Add Event.

- Select the intent that you want to add.

- Scroll back to the top of the screen, give your intent a name, and then click Save.

Using the Actions SDK

If you’re using the Actions SDK, then you’ll need to specify the mapping between each built-in intent and the Actions in your Action package, which is a JSON file that you create using the gactions CLI.

For example, here we’re updating the Action package to support the GET_CREDIT_SCORE built-in intent:

    {
      "actions": [
        {
          "description": "Welcome Intent",
          "name": "MAIN",
          "fulfillment": {
            "conversationName": "conversation1"
          },
          "intent": {
            "name": "actions.intent.MAIN"
          }
        },
        {
          "description": "Get Credit Score",
          "name": "GET_CREDIT_SCORE",
          "fulfillment": {
            "conversationName": "conversation1"
          },
          "intent": {
            "name": "actions.intent.GET_CREDIT_SCORE"
          }
        }
      ],

This excerpt stops after the actions array; the remainder of the Action package is not shown here.

Drive Traffic to Your Actions With Deep Links

You can now generate Action Links to provide quick and easy access to your app’s Actions. When the user interacts with one of your Action Links on their smartphone or Smart Display, they’ll be taken directly to their Assistant, where they can interact with the associated Action. If they interact with one of your Action Links on a desktop computer, then they’ll be prompted to select the Assistant-enabled device where they want to access your Action.

To see an example of deep linking, check out this Action Link from the meditation and mindfulness app, Headspace.

To generate an Action Link:

- Head over to the Actions Console.

- Open the project where you want to create your Action Link.

- In the left-hand menu, select Actions.

- Select the Action that you want to generate a link for.

- Scroll down to the Links section, and click to expand.

- Drag the Would you like to enable a URL for this Action? slider, so it’s set to On.

- Give your link a title.

- Scroll to the top of the page, and then click Save.

- Find the URL in the Links section, and then click Copy URL.

You can now use this URL in any location that supports a hyperlink, such as websites, blogs, Facebook, Twitter, YouTube comment sections, and more.

Become Part of Your Users’ Everyday Routines

The most effective way to drive people to your app is to become part of their daily routines. Google Assistant already allows users to execute multiple Actions at once, as part of pre-set routines, but now Google is launching a developer preview of Routine Suggestions.

Once this feature becomes publicly available, you’ll be able to prompt users to add your own Actions to their routines.

Although this feature isn’t ready to be rolled out just yet, you can add Routine Suggestions support to your Actions, ready for when this feature does graduate out of developer preview.

- Head over to the Actions on Google console.

- Select your project.

- In the left-hand menu, select Actions.

- Select the Action where you want to add Routine Suggestions support.

- Scroll to the User engagement section, and then click to expand.

- Push the Would you like to let users add this Action to Google Assistant Routines? slider, so it’s set to On.

- Enter a Content title.

- Scroll back to the top of the screen, and then click Save.

A New Release of the Cross-Platform Flutter Toolkit

If your mobile app is going to reach the widest possible audience, then you’ll need to develop for other platforms besides Android! This has long presented developers with a conundrum: do you build the same app multiple times, or compromise with a cross-platform solution that doesn’t quite deliver the native experience that mobile users have come to expect?

At Google I/O 2017, Google announced Flutter, a UI toolkit that promised to help you write your code once and provide a native experience for both iOS and Android, with widgets that are styled according to Cupertino (iOS) and Material Design (Android) guidelines.

At this year’s event, Google launched the third beta release of Flutter, with new features such as:

- Dart 2 is enabled by default.

- Localisation support, including support for right-to-left languages and mirrored controls.

- More options for building accessible apps, with support for screen readers, large text, and contrast capabilities.

To get started with Flutter, you’ll need to set up Git, if you haven’t already. Once you’ve installed Git, you can get your hands on Flutter by running the following command from a Terminal or Command Prompt window:

git clone -b beta https://github.com/flutter/flutter.git

You can use Flutter with any text editor, but if you install the Flutter and Dart plugins then you can create Flutter apps using Android Studio:

- Launch Android Studio and select Android Studio > Preferences… from the toolbar.

- Select Plugins from the left-hand menu.

- Give the Browse repositories… button a click.

- Search for Flutter, and then click the green Install button.

- When Android Studio prompts you to install Dart, click Yes.

- Restart Android Studio.

You now have access to a selection of Flutter templates, so the easiest way to get a feel for this toolkit is to create a project using one of these templates:

- Select New > New Flutter Project…

- Select the Flutter application template, and then click Next.

- Complete the project setup, as normal.

To run this app:

- Open the Flutter Device Selection dropdown.

- Select your Android Virtual Device (AVD) or physical Android device from the list.

- Select Run > main.dart from the Android Studio toolbar.

This launches a simple app that tracks how many times you’ve tapped a Floating Action Button.

To take a look at the code powering this app, open your project’s flutter_app/lib/main.dart file.

Let Google Assistant Book Your Next Hair Appointment

While this technically isn’t something you can add to your apps or start experimenting with today, Google Duplex was one of the most intriguing announcements made during the opening keynote, so it definitely deserves a mention.

While many businesses have an online presence, there are still times when you’ll need to pick up the phone and contact a business directly, especially when you’re dealing with smaller, local businesses.

During the opening keynote, Sundar Pichai announced that Google is testing a new feature that aims to automate tasks that’d typically require you to pick up the phone, such as reserving a table at your favourite restaurant or booking a haircut.

Using this new feature, you’ll just need to specify the date and time when you want to book your appointment, and Google Assistant will then call the business on your behalf. Powered by a new technology called Google Duplex, the Assistant will be able to understand complex sentences and fast speech, and will respond naturally in a telephone conversation, so the person on the other end of the line can talk to Google Assistant as though it were another human being, rather than a computerised voice!

Once Google Assistant has booked your appointment, it’ll even add a reminder to your calendar, so you don’t forget about your haircut or dinner reservations.

Currently, Duplex is restricted to scheduling certain types of appointments, but several clips were played during the Google I/O keynote, and the results are already pretty impressive. You can hear these clips for yourself, over at the Google AI blog.

Behind the scenes, Duplex is a recurrent neural network (RNN), built using the TensorFlow Extended (TFX) machine learning platform and trained using a range of anonymised phone conversation data. The computerised voice varies its intonation based on the context, thanks to a combination of a concatenative text-to-speech (TTS) engine and a synthesis TTS engine that uses Tacotron and WaveNet. Google has also added some “hmmm”s, “uh”s, and pauses, calculated to help the conversation sound more natural.

As well as being convenient for the user, this technology can help small businesses that rely on bookings but don’t have an online booking system, while also reducing no-shows by reminding customers about their appointments. Duplex also has huge potential to help hearing-impaired users, or people who don’t speak the local language, by completing tasks that would be challenging for them to perform unassisted.

Conclusion

In this article, we covered some of the most noteworthy developer-focused announcements at Google I/O 2018, but there’s plenty more we haven’t touched on! If you want to catch up on all the Google-related news (and you have a few hundred hours to spare) then you can watch all the Google I/O 2018 sessions on YouTube.
